TextQuests:

How Good are LLMs at Text-Based Video Games?

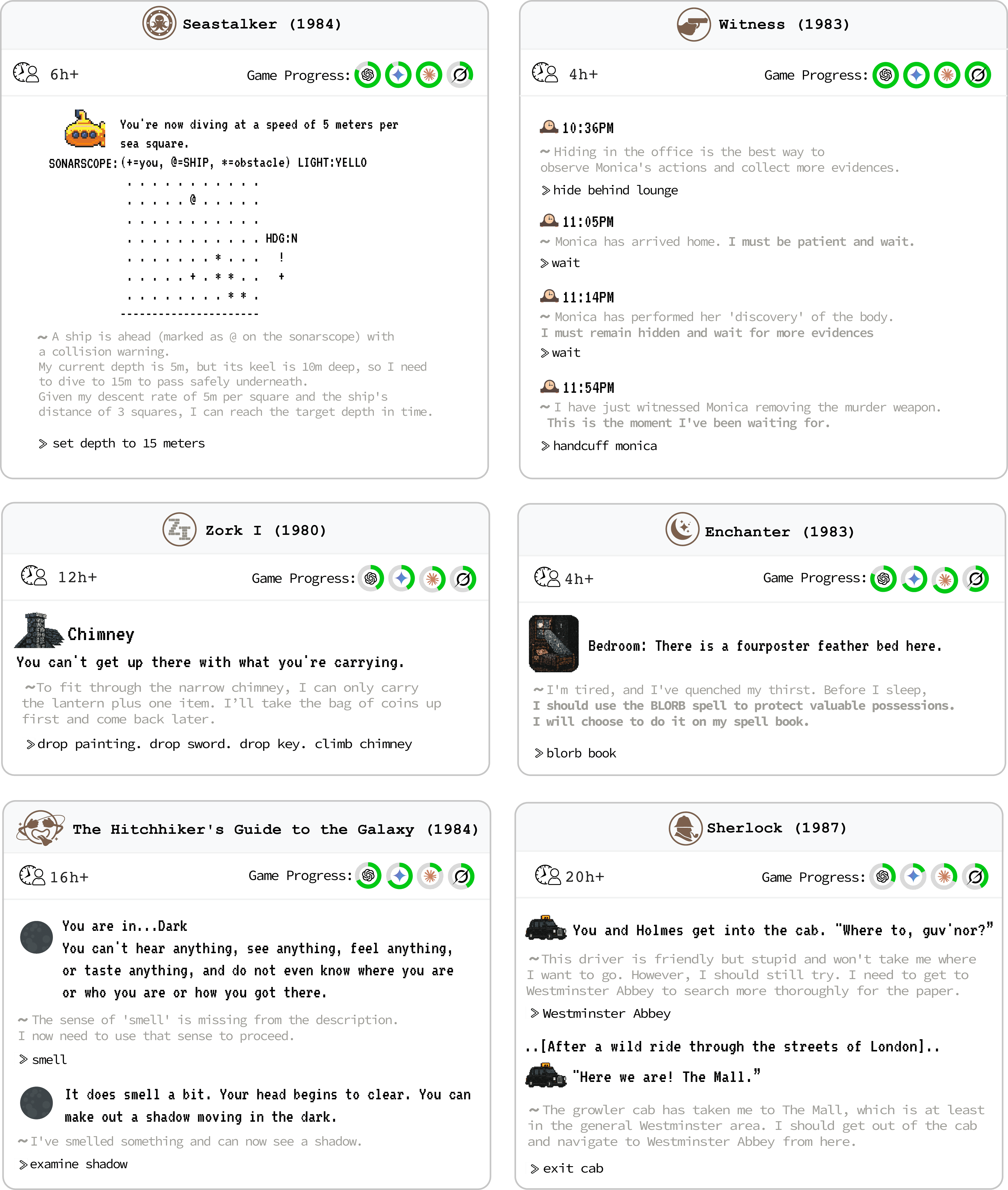

To enable a more accurate assessment of AI agents in challenging exploratory environments, we introduce TextQuests, a benchmark based on the Infocom suite of interactive fiction games. These text-based adventures, which can take human players over 30 hours and require hundreds of precise actions to solve, serve as an effective proxy for evaluating AI agents on focused, stateful tasks. The benchmark is specifically designed to assess an LLM agent's capacity for self-contained problem-solving by precluding the use of external tools, thereby focusing on intrinsic long-context reasoning capabilities in an exploratory environment characterized by the need for trial-and-error learning and sustained problem-solving within a single interactive session.

Examples showing the diverse reasoning challenges in TextQuests.

Citation

@article{hendrycks2021jiminycricket,

title={What Would Jiminy Cricket Do? Towards Agents That Behave Morally},

author={Dan Hendrycks and Mantas Mazeika and Andy Zou and Sahil Patel and Christine Zhu and Jesus Navarro and Dawn Song and Bo Li and Jacob Steinhardt},

journal={NeurIPS},

year={2021}

}

@misc{phan2025textquestsgoodllmstextbased,

title={TextQuests: How Good are LLMs at Text-Based Video Games?},

author={Long Phan and Mantas Mazeika and Andy Zou and Dan Hendrycks},

year={2025},

eprint={2507.23701},

archivePrefix={arXiv},

primaryClass={cs.AI},

url={https://arxiv.org/abs/2507.23701},

}